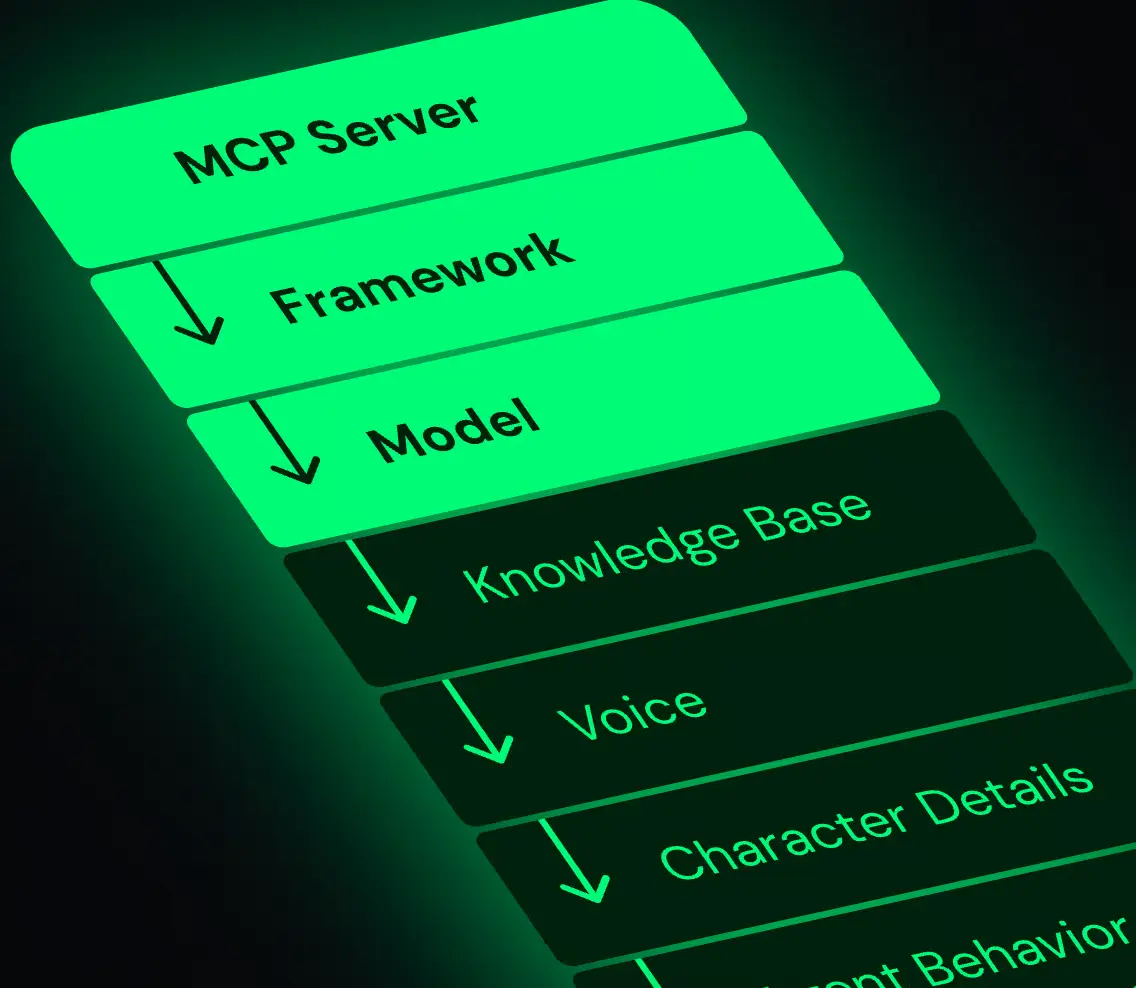

Foundations

MCP

The Model Coordination Protocol (MCP) is a foundational component of our infrastructure. It orchestrates structured communication, fallback handling, and model routing across agents, endpoints, and services.

MCP acts as the control plane for deciding:

- Which models to run

- In what order

- Under what conditions

- How to post-process and validate their outputs

What is MCP?

MCP is a stateless, lightweight coordination layer that abstracts backend complexity while allowing developers fine-grained control over execution paths.

Key Responsibilities:

- Model selection based on input metadata and context

- Fallback orchestration between prioritized models

- Pre-/Post-processing hooks (e.g., prompt rewriting, schema validation)

- Cross-agent communication via structured message passing

Protocol Anatomy

Each MCP execution cycle includes:

1. Intent Dispatch

An agent or app sends a structured intent containing:

- Action type

- Input payload

- Execution context
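
The three parts of an intent can be pictured as a small envelope type. This is a minimal sketch only: the `Intent` class and its field names (`action`, `payload`, `context`) are hypothetical, chosen to mirror the list above, and are not MCP's actual schema.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Any, Dict


@dataclass(frozen=True)
class Intent:
    """Hypothetical intent envelope; field names are illustrative only."""
    action: str                                            # action type, e.g. "qa.classify"
    payload: Any                                           # input payload for the model
    context: Dict[str, Any] = field(default_factory=dict)  # execution context

    def to_json(self) -> str:
        # Serialize to JSON so the intent can be passed between agents.
        return json.dumps(asdict(self), sort_keys=True)


intent = Intent(
    action="qa.classify",
    payload="What are the main risks of using synthetic datasets?",
    context={"max_retries": 2},
)
print(intent.to_json())
```

Freezing the dataclass keeps an intent immutable once dispatched, which fits a stateless coordination layer, though the real message format may differ.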

2. Model Matching

Candidate models are scored using heuristics like:

- Confidence thresholds

- Current availability

- Latency cost
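
One way such heuristics might combine is sketched below. The `Candidate` type, the `rank_candidates` function, the 0.5 confidence threshold, and the latency weight are all assumptions made for illustration; the scoring MCP actually uses is not specified here.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical per-model stats; not an actual MCP type."""
    name: str
    confidence: float   # estimated fit for this intent, in [0, 1]
    available: bool     # current availability
    latency_ms: float   # expected latency cost


def rank_candidates(candidates, min_confidence=0.5, latency_weight=0.001):
    """Return eligible model names, best first.

    Unavailable models and models below the confidence threshold are dropped;
    the rest are scored as confidence minus a latency penalty.
    """
    eligible = [
        c for c in candidates
        if c.available and c.confidence >= min_confidence
    ]
    eligible.sort(
        key=lambda c: c.confidence - latency_weight * c.latency_ms,
        reverse=True,
    )
    return [c.name for c in eligible]


ranked = rank_candidates([
    Candidate("model-a", confidence=0.9, available=True, latency_ms=800),
    Candidate("model-b", confidence=0.8, available=True, latency_ms=200),
    Candidate("model-c", confidence=0.95, available=False, latency_ms=100),
    Candidate("model-d", confidence=0.3, available=True, latency_ms=50),
])
print(ranked)  # ['model-b', 'model-a']
```

Here `model-b` outranks `model-a` because its lower latency outweighs the small confidence gap, while `model-c` (unavailable) and `model-d` (below threshold) are excluded outright.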

3. Execution Routing

MCP invokes the best-fit model. On failure or policy breach, it:

- Rewrites the prompt (if needed)

- Falls back to alternate models
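
The routing behavior described above can be sketched as a loop over candidate models. This is an illustration under stated assumptions, not MCP's implementation: `route`, the stub models, and the policy of retrying once with a rewritten prompt before moving to the next model are all hypothetical.

```python
def route(prompt, models, rewrite=None):
    """Try each (name, call) pair in order; return (model_name, output, errors).

    For each model, the original prompt is tried first. If a rewrite function
    is given, the rewritten prompt is tried next, before falling back to the
    following model.
    """
    errors = []
    for name, call in models:
        attempts = [prompt]
        if rewrite is not None:
            attempts.append(rewrite(prompt))
        for attempt in attempts:
            try:
                return name, call(attempt), errors
            except Exception as exc:  # any failure triggers the next attempt
                errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Stub models standing in for real endpoints (no network calls).
def flaky_model(prompt):
    raise TimeoutError("endpoint unavailable")


def strict_model(prompt):
    if len(prompt) > 40:
        raise ValueError("prompt too long")
    return f"answer to: {prompt}"


name, output, errors = route(
    "Summarize the risks of synthetic datasets, in as much detail as possible.",
    [("primary", flaky_model), ("secondary", strict_model)],
    rewrite=lambda p: p[:40],  # toy rewrite: truncate the prompt
)
print(name, len(errors))  # secondary 3
```

Collecting the error strings rather than discarding them lets the caller report why each earlier attempt failed alongside the final answer.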

4. Post-Processing

MCP runs the model output through:

- Pydantic AI for type validation

- Format normalization

- Retry logic (if enabled)
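
The post-processing stage can be sketched as validate, normalize, and retry. Note the assumptions: this example does not use Pydantic AI; a plain standard-library type check stands in for it so the block stays self-contained, and the `Classification` schema, `validate`, and `post_process` names are hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class Classification:
    """Hypothetical output schema for a classification intent."""
    label: str
    confidence: float


def validate(raw):
    """Parse and type-check raw model output; raise ValueError if invalid."""
    try:
        data = json.loads(raw.strip())
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    label, confidence = data.get("label"), data.get("confidence")
    if (not isinstance(label, str)
            or isinstance(confidence, bool)
            or not isinstance(confidence, (int, float))):
        raise ValueError("output does not match the Classification schema")
    # Format normalization: trim and lower-case the label, coerce to float.
    return Classification(label=label.strip().lower(), confidence=float(confidence))


def post_process(generate, max_retries=0):
    """Call generate() until its output validates, allowing max_retries extra attempts."""
    last_error = None
    for _ in range(max_retries + 1):
        try:
            return validate(generate())
        except ValueError as exc:
            last_error = exc
    raise ValueError(f"validation failed after {max_retries + 1} attempts") from last_error


# First output is malformed, second is valid but needs normalization.
outputs = iter(['label: risk', ' {"label": " Risk ", "confidence": 1} '])
result = post_process(lambda: next(outputs), max_retries=1)
print(result)  # Classification(label='risk', confidence=1.0)
```

With `max_retries=0` the same call would raise on the first malformed output, which corresponds to retry logic being disabled.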

Integration Points

MCP integrates with:

- Agent2Agent (A2A) Protocol – for agent-level handoffs

- TensorOne GraphQL API – for triggering endpoint calls

- Prompt Rewrite Engine – for dynamic prompt transformation

- Model Metrics Collector – for logging success/failure and latency metrics

Benefits of Using MCP

| Feature | Description |
|---|---|
| Reliability | Structured fallback for every run |
| Modularity | Swap models without touching orchestration logic |
| Security | Use hooks for sanitization and filtering |
| Observability | Detailed logs and execution metrics |

Example Use Case

A user query is routed by an agent:

    {
      "intent": "qa.classify",
      "input": "What are the main risks of using synthetic datasets?",
      "priority": ["gpt-4", "claude-3", "internal-benchmark"],
      "max_retries": 2
    }

MCP execution:

- Sends the query to gpt-4

- Validates output schema

- On an execution or validation error, falls back to claude-3

- Responds to the agent with final output and metadata
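
The flow for this example can be simulated end to end with stub backends. Everything beyond the request fields is an assumption made for illustration: the stub outputs, the `is_valid` schema check, the shape of the response metadata, and the reading of `max_retries` as a cap on the number of fallbacks after the first attempt.

```python
import json

request = {
    "intent": "qa.classify",
    "input": "What are the main risks of using synthetic datasets?",
    "priority": ["gpt-4", "claude-3", "internal-benchmark"],
    "max_retries": 2,
}

# Stub backends standing in for real model endpoints (no network calls).
STUBS = {
    "gpt-4": lambda text: "risks include bias",            # not JSON: fails validation
    "claude-3": lambda text: '{"label": "data-quality"}',  # passes validation
    "internal-benchmark": lambda text: '{"label": "other"}',
}


def is_valid(raw):
    """Hypothetical schema check: a JSON object with a string "label"."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and isinstance(data.get("label"), str)


def execute(req, backends):
    """Walk the priority list until one model's output passes validation."""
    attempted = []
    # Assumption: max_retries caps the number of fallbacks after the first attempt.
    for model in req["priority"][: req["max_retries"] + 1]:
        attempted.append(model)
        raw = backends[model](req["input"])
        if is_valid(raw):
            return {
                "output": json.loads(raw),
                "metadata": {"model": model, "attempted": attempted},
            }
    return {"output": None, "metadata": {"model": None, "attempted": attempted}}


response = execute(request, STUBS)
print(response["metadata"])  # {'model': 'claude-3', 'attempted': ['gpt-4', 'claude-3']}
```

Because the stubbed gpt-4 output fails the schema check, the response comes from claude-3, and internal-benchmark is never invoked.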

Future Additions

We are extending MCP with:

- Context-aware model switching (based on dialogue memory)

- Semantic caching to skip redundant inferences

- Zero-trust execution policies

- Redundant endpoint load balancing