GPU Framework

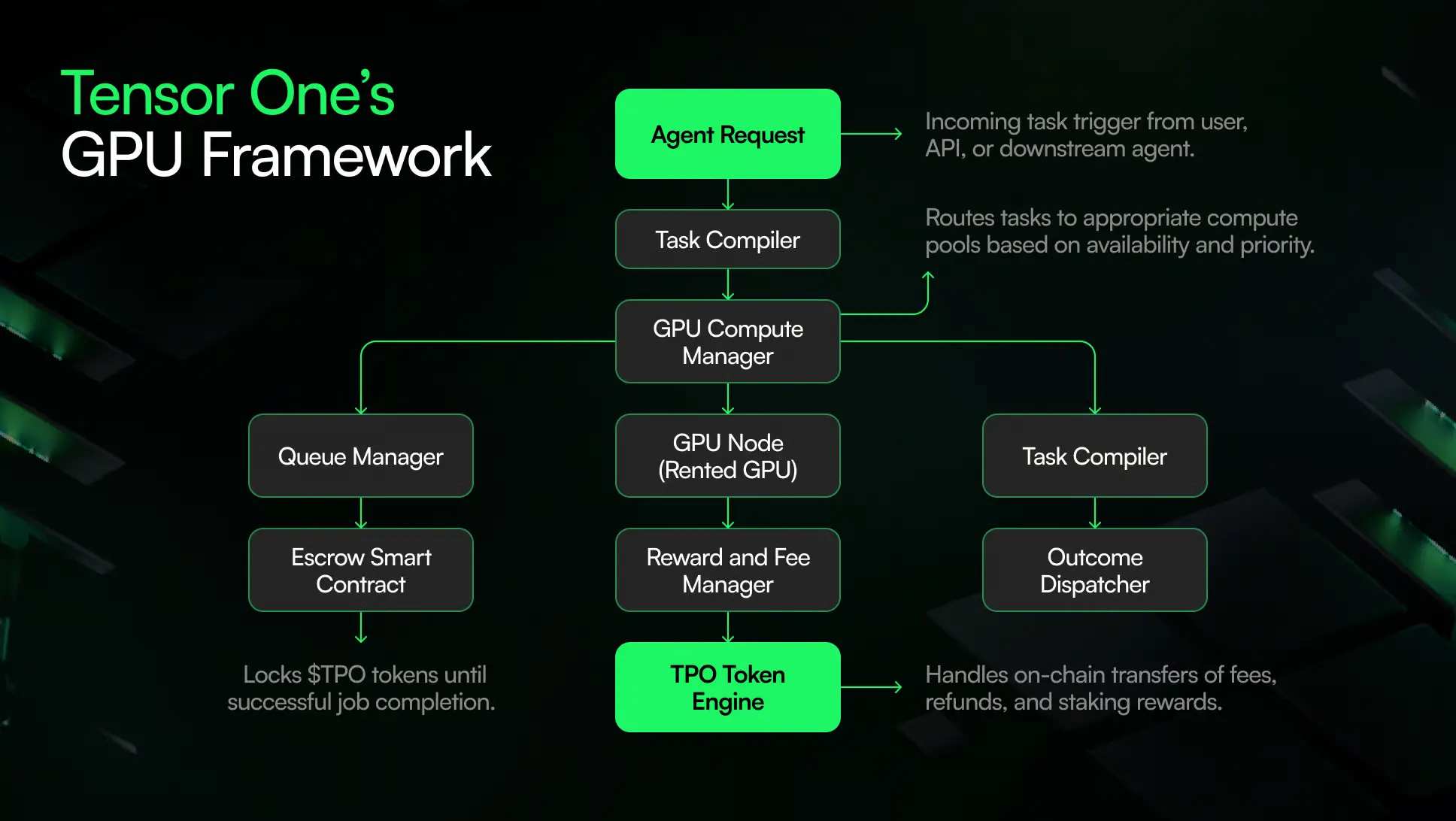

Tensor One’s GPU Framework

The GPU Framework manages computation tasks across distributed GPU resources. It receives requests from users or agents, converts them into executable tasks, and routes them to available GPU nodes.

The GPU Compute Manager receives the task and, depending on availability, load, and priority, decides which compute pool to route it to. To guarantee equitable use and safeguard resource providers, the task is queued before being executed, and a smart contract locks $TPO tokens as collateral.
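The routing and collateral step can be illustrated with a minimal in-memory sketch. Everything here is an assumption made for illustration: the names `ComputePool`, `Task`, `ComputeManager`, and `choose_pool` are hypothetical, the "least-loaded pool with enough free GPUs" rule is just one plausible policy, and a plain dictionary stands in for the smart contract that locks $TPO.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class ComputePool:
    name: str
    free_gpus: int   # availability
    load: float      # fraction of capacity in use, 0.0 to 1.0


@dataclass
class Task:
    task_id: str
    gpus_needed: int
    priority: int    # assumption: lower number means more urgent
    collateral: int  # assumption: fixed $TPO amount locked per task


def choose_pool(task, pools):
    """Return the least-loaded pool with enough free GPUs, or None."""
    candidates = [p for p in pools if p.free_gpus >= task.gpus_needed]
    return min(candidates, key=lambda p: p.load, default=None)


class ComputeManager:
    """Hypothetical stand-in for the GPU Compute Manager."""

    def __init__(self, pools, balances):
        self.pools = pools
        self.balances = balances          # requester -> spendable $TPO
        self.locked = {}                  # task_id -> locked collateral
        self._queue = []                  # heap ordered by priority
        self._counter = itertools.count() # tie-breaker keeps FIFO order

    def submit(self, requester, task):
        pool = choose_pool(task, self.pools)
        if pool is None:
            raise RuntimeError("no pool with enough free GPUs")
        if self.balances.get(requester, 0) < task.collateral:
            raise ValueError("insufficient $TPO for collateral")
        # Lock collateral first, then queue the task for execution.
        self.balances[requester] -= task.collateral
        self.locked[task.task_id] = task.collateral
        entry = (task.priority, next(self._counter), task.task_id, pool.name)
        heapq.heappush(self._queue, entry)
        return pool.name

    def next_task(self):
        """Pop the most urgent queued task as (task_id, pool_name)."""
        _, _, task_id, pool_name = heapq.heappop(self._queue)
        return task_id, pool_name
```

In this sketch a task is never queued unless its collateral has been locked, which mirrors the ordering described above; a real deployment would perform the lock on-chain rather than in a local dictionary.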

Once queued, the task runs on a GPU node. When it finishes, the results are checked and recorded, then forwarded to the Outcome Dispatcher, which delivers them to the requester or to the next agent downstream. At the same time, the Reward and Fee Manager computes payouts, refunds, or staking rewards.
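To make the settlement arithmetic concrete, here is a small sketch of how payouts, refunds, and staking rewards might be split once a result has been checked. The `settle` function, the `Settlement` type, and the 5% staking share (`staking_bps=500`) are all hypothetical; the source does not specify the actual split or how verification is performed, so verification is reduced to a boolean flag.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Settlement:
    provider_payout: int
    staking_reward: int
    requester_refund: int


def settle(locked: int, fee: int, verified: bool, staking_bps: int = 500) -> Settlement:
    """Split locked collateral after a task finishes.

    Assumptions (not from the source): amounts are integer token units,
    a failed verification refunds the requester in full, and a fixed
    share of the fee (in basis points) goes to stakers.
    """
    if fee < 0 or fee > locked:
        raise ValueError("fee must be between 0 and the locked amount")
    if not verified:
        return Settlement(provider_payout=0, staking_reward=0, requester_refund=locked)
    staking = fee * staking_bps // 10_000
    return Settlement(
        provider_payout=fee - staking,
        staking_reward=staking,
        requester_refund=locked - fee,
    )
```

Whatever the real split is, a useful invariant for this kind of function is that the three outputs always sum to the locked amount, so no tokens are created or lost during settlement.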

The TPO Token Engine manages on-chain transfers, such as compute fees, escrow releases, and incentive distributions, guaranteeing a trustworthy and transparent token flow connected to actual compute activity.
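The idea of a token flow tied to compute activity can be sketched as an append-only ledger in which every transfer carries the task it belongs to. This is an off-chain illustration only: `TokenLedger`, `Transfer`, and `TransferKind` are hypothetical names, and the actual TPO Token Engine operates on-chain with an interface the source does not describe.

```python
from dataclasses import dataclass
from enum import Enum


class TransferKind(Enum):
    COMPUTE_FEE = "compute_fee"
    ESCROW_RELEASE = "escrow_release"
    INCENTIVE = "incentive"


@dataclass(frozen=True)
class Transfer:
    kind: TransferKind
    task_id: str     # links the token movement to a compute task
    sender: str
    recipient: str
    amount: int


class TokenLedger:
    """Hypothetical in-memory stand-in for on-chain token accounting."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.log = []  # append-only record of applied transfers

    def apply(self, transfer: Transfer) -> None:
        if transfer.amount <= 0:
            raise ValueError("amount must be positive")
        if self.balances.get(transfer.sender, 0) < transfer.amount:
            raise ValueError("insufficient balance")
        self.balances[transfer.sender] -= transfer.amount
        self.balances[transfer.recipient] = (
            self.balances.get(transfer.recipient, 0) + transfer.amount
        )
        self.log.append(transfer)
```

Because each logged `Transfer` names a `task_id`, anyone replaying the log can check that every token movement corresponds to a specific task, which is the property the paragraph above describes.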

This framework provides permissionless, programmable, and verifiable access to decentralised GPU compute for agents and developers.