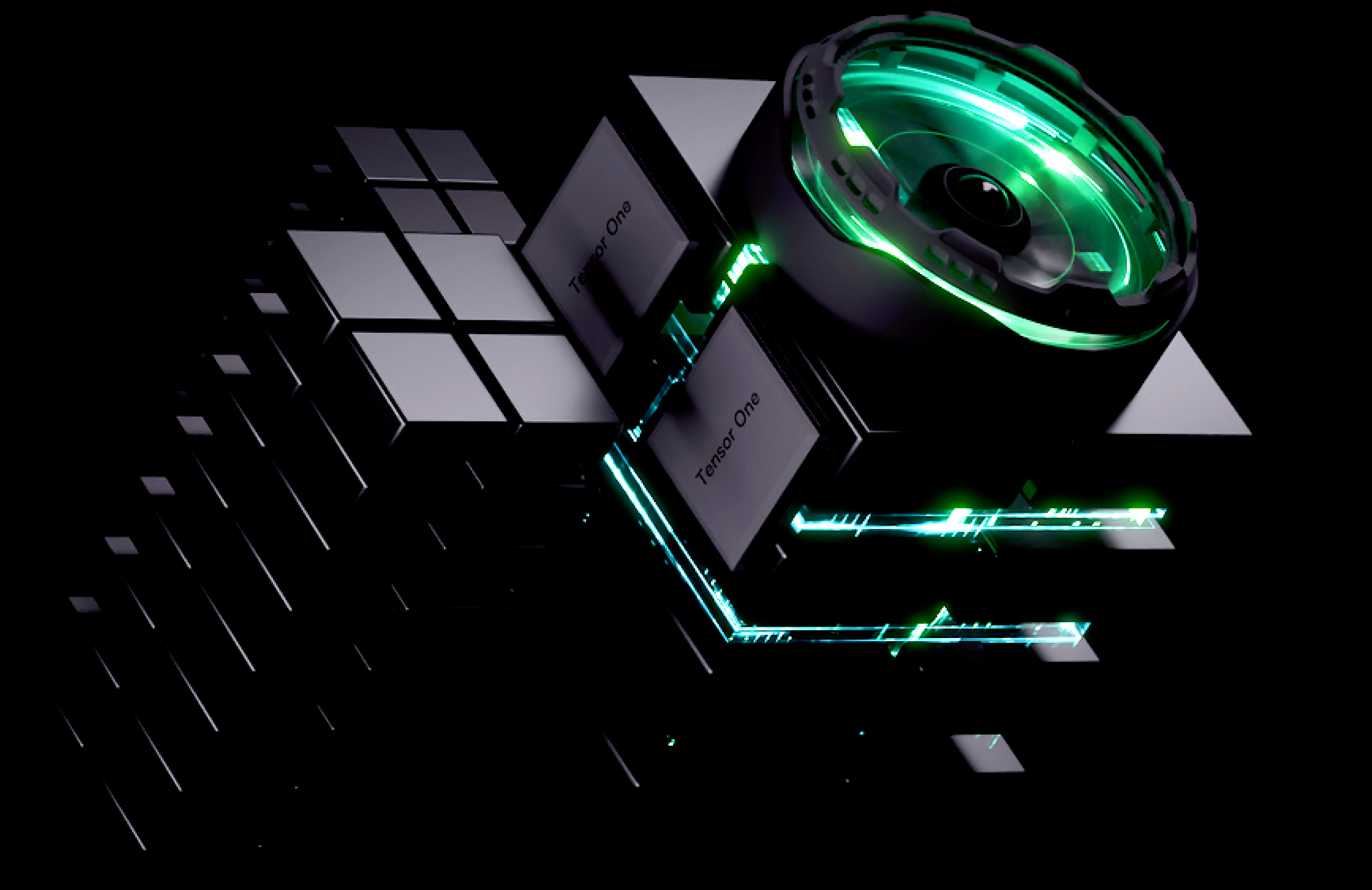


Hypervisor VMM

hypervisor-vmm: GPU Virtualization Engine for TensorOne.

hypervisor-vmm is the low-level engine that powers TensorOne’s GPU Virtual Private Servers (VPS). It abstracts bare-metal GPU instances into scalable, isolated compute environments optimized for high-throughput machine learning (ML), AI inference, and containerized workloads.

What is a Virtual Machine Monitor (VMM)?

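A Virtual Machine Monitor (VMM), or hypervisor, is the software layer that runs isolated guest environments on shared physical hardware and mediates their access to it. In TensorOne, hypervisor-vmm is a lightweight, high-efficiency VMM responsible for: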

- Virtualizing hardware-level GPU access

- Isolating cluster workloads

- Enforcing multi-tenant execution safety

- Enabling container-to-GPU passthrough

Unlike traditional hypervisors, which run full guest operating systems, hypervisor-vmm is container-native: it is optimized for Ubuntu-based containers, supports direct NVLink passthrough, and keeps Docker image mounting overhead near zero.

Key Technologies

GPU Passthrough

TensorOne clusters use physical NVIDIA GPUs (e.g., A100, RTX 3090, A6000) attached via direct PCIe passthrough:

[ Container ] ↔ [ hypervisor-vmm ] ↔ [ PCIe → Physical GPU ]

This configuration ensures:

- Full CUDA compatibility

- Access to the GPU's full VRAM

- Compatibility with ML libraries (e.g., PyTorch, TensorFlow)

- Real-time metrics: memory, utilization, temperature
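Because the container sees the physical GPU, these metrics can be read with standard NVIDIA tooling from inside it. The sketch below assumes `nvidia-smi` is available on the container's `PATH` and uses its `--query-gpu` and `--format=csv,noheader,nounits` options; the `GpuMetrics`, `parse_gpu_csv`, and `query_gpus` names are illustrative, not part of TensorOne's tooling.

```python
import csv
import io
import shutil
import subprocess
from dataclasses import dataclass
from typing import List, Optional

# Standard `nvidia-smi --query-gpu` fields covering memory, utilization, temperature.
QUERY_FIELDS = ["index", "name", "memory.used", "memory.total",
                "utilization.gpu", "temperature.gpu"]


@dataclass
class GpuMetrics:
    index: int
    name: str
    memory_used_mib: Optional[int]
    memory_total_mib: Optional[int]
    utilization_pct: Optional[int]
    temperature_c: Optional[int]


def _to_int(value: str) -> Optional[int]:
    """Unsupported fields are reported as e.g. "[N/A]"; map them to None."""
    try:
        return int(value)
    except ValueError:
        return None


def parse_gpu_csv(text: str) -> List[GpuMetrics]:
    """Parse `--format=csv,noheader,nounits` output (one row per GPU)."""
    gpus = []
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue
        idx, name, used, total, util, temp = (f.strip() for f in row)
        gpus.append(GpuMetrics(int(idx), name, _to_int(used), _to_int(total),
                               _to_int(util), _to_int(temp)))
    return gpus


def query_gpus() -> List[GpuMetrics]:
    """Query the passed-through GPUs; returns [] when nvidia-smi is not on PATH."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(QUERY_FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"GPU {gpu.index} ({gpu.name}): {gpu.memory_used_mib}/"
              f"{gpu.memory_total_mib} MiB, {gpu.utilization_pct}% util, "
              f"{gpu.temperature_c} C")
```

Parsing is kept separate from the `subprocess` call so the parser can be exercised without a GPU present.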

Dynamic Resource Mapping

Each cluster is provisioned with a dynamic profile:

- vCPU: Dedicated logical CPUs

- RAM: DDR5 slices with bandwidth isolation

- Storage:

  - Ephemeral Container Disk (non-persistent, fast I/O)

  - Persistent Volume (durable, reboot-safe)

Resources can be dynamically scaled via the GraphQL API or tensoronecli.
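A resource profile like this can be modeled as an immutable value that is re-validated on every change. This is a hypothetical sketch: the `ResourceProfile` field names and GB units are assumptions, and the rule that a persistent volume may grow but not shrink is an assumed policy for illustration, not documented hypervisor-vmm behavior.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ResourceProfile:
    """Hypothetical cluster profile; field names and GB units are assumptions."""
    vcpus: int              # dedicated logical CPUs
    ram_gb: int             # RAM slice
    container_disk_gb: int  # ephemeral container disk (non-persistent)
    volume_gb: int          # persistent volume (reboot-safe)

    def __post_init__(self) -> None:
        if self.vcpus < 1 or self.ram_gb < 1:
            raise ValueError("a cluster needs at least 1 vCPU and 1 GB of RAM")
        if self.container_disk_gb < 0 or self.volume_gb < 0:
            raise ValueError("disk sizes cannot be negative")

    def scale(self, **changes: int) -> "ResourceProfile":
        """Return a resized copy; the constructor re-runs validation."""
        new = replace(self, **changes)
        # Assumed policy: shrinking a persistent volume could lose data.
        if new.volume_gb < self.volume_gb:
            raise ValueError("persistent volume can grow but not shrink")
        return new
```

For example, `ResourceProfile(8, 32, 20, 40).scale(ram_gb=64)` returns a new profile with 64 GB of RAM and leaves the original unchanged.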

Secure Multi-Tenant Scheduling

To enforce secure, isolated execution across shared infrastructure:

- AppArmor & Seccomp enforcement per container

- Encrypted TLS proxy communication

- Automatic idle timeouts & sandboxing

This allows multiple public endpoints to run safely on shared GPU hosts.
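The idle-timeout rule can be sketched as a tracker that records each session's last activity and reclaims sessions that stay quiet past a threshold. This is a minimal illustration only; the `IdleReaper` class, the timeout value, and what the platform does with a reclaimed session are assumptions.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class IdleReaper:
    """Hypothetical idle-timeout tracker for container sessions."""
    timeout_s: float
    clock: Callable[[], float] = time.monotonic
    _last_seen: Dict[str, float] = field(default_factory=dict, init=False, repr=False)

    def touch(self, session_id: str) -> None:
        """Record activity for a session, e.g. a request through the TLS proxy."""
        self._last_seen[session_id] = self.clock()

    def reap(self) -> List[str]:
        """Stop tracking and return sessions idle for longer than timeout_s."""
        now = self.clock()
        expired = [sid for sid, seen in self._last_seen.items()
                   if now - seen > self.timeout_s]
        for sid in expired:
            del self._last_seen[sid]
        return expired
```

The injectable `clock` keeps the logic testable, and `time.monotonic` is the default because it is not affected by wall-clock adjustments.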

Boot Flow Architecture

```mermaid
graph TD
    A[Project Template] --> B[hypervisor-vmm]
    B --> C[Container Provisioning]
    C --> D[GPU & Disk Binding]
    D --> E[Runtime Session]
    E --> F[Web Proxy / Serverless Endpoint]
```

Each project deployment initializes from a template, attaches to physical GPU/disk resources via hypervisor-vmm, and is then exposed via runtime proxies.
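Because the flow is strictly linear, a deployment can be modeled as an ordered state machine that never reaches the runtime session before GPU and disk binding. The sketch below is hypothetical: the `Stage` and `Deployment` names only mirror the boxes in the boot-flow diagram and are not a TensorOne API.

```python
from enum import Enum
from typing import List


class Stage(Enum):
    """Boot-flow stages, in diagram order."""
    PROJECT_TEMPLATE = 1
    HYPERVISOR_VMM = 2
    CONTAINER_PROVISIONING = 3
    GPU_DISK_BINDING = 4
    RUNTIME_SESSION = 5
    EXPOSED = 6  # web proxy / serverless endpoint


class Deployment:
    """Hypothetical tracker that allows only the linear transitions of the flow."""

    def __init__(self) -> None:
        self.stage = Stage.PROJECT_TEMPLATE
        self.history: List[Stage] = [self.stage]

    def advance(self) -> Stage:
        """Move to the next stage; no stage can be skipped."""
        if self.stage is Stage.EXPOSED:
            raise RuntimeError("deployment is already exposed")
        self.stage = Stage(self.stage.value + 1)
        self.history.append(self.stage)
        return self.stage

    def advance_to(self, target: Stage) -> None:
        """Step forward through every intermediate stage until target."""
        if target.value < self.stage.value:
            raise ValueError(f"cannot go back from {self.stage.name} to {target.name}")
        while self.stage is not target:
            self.advance()
```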

Developer-Facing Interfaces

You can control hypervisor-backed compute environments through:

GraphQL SDK:

- `clusterFindAndDeployOnDemand`

- `clusterRentInterruptable`

TensorOne CLI:

- `tensoronecli create clusters`

- `tensoronecli start cluster`

Environment Variables:

- `TENSORONE_CLUSTER_ID`

- `TENSORONE_API_KEY`
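Code running inside a cluster can read these environment variables at startup. As a minimal sketch, assuming `TENSORONE_API_KEY` is required and `TENSORONE_CLUSTER_ID` may be absent (for example, when running locally; this is an assumption), a loader can fail fast on a missing key and keep the key out of logs. `TensorOneEnv` and `load_env` are hypothetical names.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping, Optional


@dataclass(frozen=True)
class TensorOneEnv:
    """Hypothetical holder for the documented environment variables."""
    cluster_id: Optional[str]         # TENSORONE_CLUSTER_ID (assumed optional)
    api_key: str = field(repr=False)  # TENSORONE_API_KEY, hidden from repr()


def load_env(environ: Mapping[str, str] = os.environ) -> TensorOneEnv:
    """Read the variables, failing fast if the API key is missing."""
    api_key = environ.get("TENSORONE_API_KEY")
    if not api_key:
        raise RuntimeError("TENSORONE_API_KEY is not set")
    return TensorOneEnv(cluster_id=environ.get("TENSORONE_CLUSTER_ID"),
                        api_key=api_key)
```

Calling `load_env()` with no arguments reads the process environment; passing a plain dict makes the loader easy to test.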

Optimized for Machine Learning

hypervisor-vmm is designed for ML workflows:

- Optimized disk I/O for rapid model loading

- NVLink-enabled for multi-GPU workloads

- Integrated with endpoint auto-scalers

Try it Out

Launch a development cluster using the CLI:

```bash
tensoronecli create clusters \
  --gpuType "NVIDIA A100" \
  --imageName "tensorone/llm-starter" \
  --containerDiskSize 20 \
  --volumeSize 40 \
  --mem 32 \
  --args "python run.py"
```
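To launch clusters from a Python script, the same `tensoronecli create clusters` invocation can be built as an argument list. This is a sketch, assuming `tensoronecli` is installed and on `PATH`; `build_create_command` and `create_cluster` are hypothetical helpers, and no flags beyond those in the example are used.

```python
import shlex
import subprocess
from typing import List


def build_create_command(gpu_type: str, image_name: str, container_disk_size: int,
                         volume_size: int, mem: int, args: str) -> List[str]:
    """Assemble a `tensoronecli create clusters` call.

    Only the flags from the documented example are used; sizes are passed
    through unchanged because their units are not stated here.
    """
    return [
        "tensoronecli", "create", "clusters",
        "--gpuType", gpu_type,
        "--imageName", image_name,
        "--containerDiskSize", str(container_disk_size),
        "--volumeSize", str(volume_size),
        "--mem", str(mem),
        "--args", args,
    ]


def create_cluster(cmd: List[str]) -> None:
    """Run the command directly (no shell), raising if the CLI exits non-zero."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = build_create_command("NVIDIA A100", "tensorone/llm-starter",
                               20, 40, 32, "python run.py")
    print(shlex.join(cmd))  # shell-quoted preview; call create_cluster(cmd) to run it
```

Passing a list to `subprocess.run` without a shell means values containing spaces, such as `"NVIDIA A100"` and `"python run.py"`, reach the CLI as single arguments without manual quoting.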

For more details, refer to the Managing Clusters and GraphQL Configuration Reference pages.